Free Hosting Everything + Ethical Heroku Warmups

Problem

I host several small projects in different places. My first hosting was AWS back in 2010. It was amazing having something that cheap, though it was an unintuitive interface for the uninitiated.

There are other services now. Google Cloud and Azure are at the AWS level. Then there are services built on top of those. Three in particular come to mind: Surge, ZEIT’s now, and the original leader in this genre, Heroku.

When Heroku came out with their free server hosting a few years back, with its beautiful git-remote push-to-deploy model, it made a pretty big impact. I pushed my first project up there in 2015.

Heroku is great, but of course they’re not cheap–that beautiful toolchain is worth charging for. On the other hand, they have a free service, but it comes with a different cost: the VM running your server is put on ice when it hasn’t been accessed in the last hour. The next access thaws it, and it takes 10-20 seconds before that initial page load completes.

10-20 seconds is a long time, on the internet.

Naturally, it wasn’t long before someone threw up a free service, hosted on Heroku, that would ping any website you entered often enough to prevent it from ever being put to sleep. This means your site is highly responsive, always, for free!

…Well, sort of. Someone pays the cost. And it’s Heroku, and thus the paying customers of Heroku.

It’s kind of terrible to repay someone giving you a free service by abusing it that way, and that’s a good way to get that service taken away. It slows down the free service for everyone, and raises the prices for everyone who pays.

What’s an ethical programmer to do?

Solution

Since my projects are not important, and are mostly there for public demos that most users will only access through links on my personal site, I just threw a small chunk of client-side code on my main page to ping my Heroku dynos and wake them up.

Since my main site (like this blog) is static, client-side-only code, I can host that for free on Surge with high responsiveness. When someone visits kylebaker.io, the client taps the dynos on the shoulder, warming them up in case someone visits by following links on my site, which is the most likely scenario.

Here’s what that code looks like (because it’s a static site with no libraries, and it would be absurd to add something like jQuery just for this, we’ll write the request in raw JS):

<script>

Note that we’re not handling the response, since we don’t care about the response.
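
A minimal sketch of such a warm-up script, assuming the sites array mentioned below holds your dyno URLs. The URLs and the warmUp name are placeholders, and fetch is just one possible choice of raw request API. In the page it would sit inside a script tag:

```javascript
// Hypothetical dyno URLs; replace these with your own Heroku apps.
var sites = [
  'https://example-app-one.herokuapp.com',
  'https://example-app-two.herokuapp.com'
];

// Fire a GET at each URL and ignore the result; the request alone wakes the dyno.
// fetchFn is passed in so the function can be exercised outside a browser.
function warmUp(urls, fetchFn) {
  return urls.map(function (url) {
    // Swallow rejections (network or CORS failures) so they never go unhandled.
    return fetchFn(url).catch(function () {});
  });
}

// In a browser, run once when the script loads.
if (typeof window !== 'undefined' && typeof window.fetch === 'function') {
  warmUp(sites, window.fetch.bind(window));
}
```

Swallowing the rejection keeps the promise quiet, but it does not hide the message the browser itself logs for a blocked cross-origin response.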

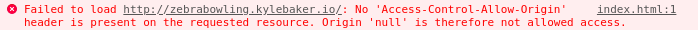

Of course, we’ll get a nasty CORS error in the console anyway–and sadly, there’s no way to catch that error. It’s a security choice on Chrome’s part.

Not a big deal… unless you’re a programmer and expect people to open the console and poke around in your site. :)

Luckily, the fix is fairly simple. Enable CORS for a single endpoint on your Heroku projects. If you’re running a small Node.js server with Express, you just need to yarn add cors, and then add something like this to your server code:

var cors = require('cors');
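
For a simple GET, what the cors middleware does boils down to one response header. As a dependency-free sketch (swapping Express and cors for Node's built-in http module, with handleRequest and start as hypothetical names), a /warmup endpoint might look like this:

```javascript
var http = require('http');

// Answer GET /warmup with an empty, CORS-enabled response; everything else is a 404.
function handleRequest(req, res) {
  var path = req.url.split('?')[0];
  if (req.method === 'GET' && path === '/warmup') {
    // Allow any origin to read this one response, so the browser stays quiet.
    res.setHeader('Access-Control-Allow-Origin', '*');
    res.statusCode = 204; // No Content: the caller only needs the dyno awake.
    res.end();
    return;
  }
  res.statusCode = 404;
  res.end();
}

// Create the server and start listening on the given port.
function start(port) {
  return http.createServer(handleRequest).listen(port);
}

// On Heroku the port arrives in the PORT environment variable:
// start(process.env.PORT || 3000);
```

With Express, the cors package's per-route form, app.get('/warmup', cors(), handler), should achieve the same thing while leaving your other routes untouched.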

Now just go back and add /warmup to the URLs in your sites array in the client code, and you’ll get lovely, quiet wake-ups for your Heroku dynos in the most ethical way possible.

This isn’t perfect. People might bookmark your Heroku sites, or get there directly from your online git repos or from a project link on your resume. I’m happy enough assuming they’ll visit my home page first, though, and you can route links through your home page to maximize the effect.

I tried ZEIT’s now service, which is like a hybrid of Surge’s beautiful minimalist command-line deployment interface and Heroku’s free server-side hosting, but of course they put free-plan servers to sleep the same way Heroku does. They also have a 1 MB file limit and a 100 MB project limit, and seemed a little slower than Heroku in my tests. Still, I’ll keep my eye on them: they seem like a great company and they’re improving rapidly. I watched them respond to issues in their Slack channel, and I was really impressed with the team. They also, for instance, give free hosting to jsperf–they’re clearly interested in giving back to the community, and their deploy process is beautiful. Unfortunately, free custom subdomains are a priority for me, and unlike Heroku and surge.sh, they don’t offer them–otherwise, I’d likely be using them for zebrabowling.

That said, they could be a great option for development purposes. Keep them in mind.

Next Level Heroku Hack

Is your Heroku project a little more important, or does it have a much higher likelihood of being arrived at directly? You can take this principle to the next level by combining strategies. Serve a landing page, a login page, etc.–or better yet, the front-end code separated from the back-end code–from Surge, and only host the custom server functionality you need on Heroku. Ping the Heroku server from the Surge client. That way you get a fast load, and can throw something at the visitor to keep them busy while Heroku warms up. Done intelligently, they’ll never notice–you only have to keep them occupied for 20 seconds at most for this sleight of hand to work.
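
One way to sketch the stalling trick, assuming a CORS-enabled /warmup endpoint on the Heroku side and a hypothetical whenAwake helper: a sleeping dyno holds that first request open until it has thawed, so the promise settling is a reasonable signal that the back end is ready.

```javascript
// Resolve to true once the server answers, or false if the request fails outright.
// fetchFn is passed in so the helper can be exercised outside a browser.
function whenAwake(url, fetchFn) {
  return fetchFn(url).then(
    function () { return true; },
    function () { return false; }
  );
}

// Browser usage (hypothetical URL and element id): the landing page renders
// immediately, and the login button is only enabled once the back end responds.
if (typeof window !== 'undefined' && typeof window.fetch === 'function') {
  whenAwake('https://example-app.herokuapp.com/warmup', window.fetch.bind(window))
    .then(function (awake) {
      var button = document.getElementById('login');
      if (button) { button.disabled = !awake; }
    });
}
```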

This will mean a more complex deploy process, and you’ll have to manage CORS, but hey–you’re getting it all for free, what do you have to complain about? Besides, this encourages the good practice of keeping your server code and client code siloed separately.

Do you feel a faint sense of familiarity? You’re actually only one step away from a microservice or serverless architecture, depending on your server needs.

More Power, More Complexity

There is another free option, which is arguably the “best”: Google Cloud Services’ free tier, which gives us an f1-micro instance for free.

Unlike AWS, which is free for a year and then just really cheap, Google Cloud now offers a free-forever level of service, which includes enough hours per month to keep an always-awake instance of their lowest spec running non-stop, month after month. While my earlier projects mindseal and Zebra Bowling are both hosted on Heroku because they predate Google Cloud’s free tier, I only recently got around to deploying zipcoder, and because of the 70 MB JSON file I use in that project, decided to go ahead and deploy it there to try out their new service.

It was a much larger hassle to get up and running than something like ZEIT, but once you have it up it’s an always-awake server. Eventually I may move all my projects to that little instance. The deployment is a little more manual, but I could of course automate that myself with a little more work (so, manual automation vs. automatic automation?). Still, it’s just a few commands: git push, ssh, cd project, git pull, pm2 restart (extremely abbreviated, but you get the idea).

The main limitation for most users, I think, will be the 600 MB of RAM. Zipcoder uses about 150 MB because it stores tens of thousands of zipcode polygons as millions of coordinates in GeoJSON in memory, in lieu of a database, for both simplicity and speed, but mindseal and Zebra Bowling are likely sub-20 MB node processes. I have nginx reverse proxying and pm2 managing my node process, so it would be relatively trivial to get two more apps up there. Of course, that said, you have to set all that up and manage it yourself.
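
As a rough check of that arithmetic, here is a sketch using the figures above (150 MB for zipcoder, at most 20 MB each for the other two, 600 MB total) and a hypothetical fitsInRam helper. It ignores whatever the OS, nginx, and pm2 themselves use:

```javascript
// Return true if the apps' combined memory use fits within the limit (both in MB).
function fitsInRam(appSizesMb, limitMb) {
  var total = appSizesMb.reduce(function (sum, mb) { return sum + mb; }, 0);
  return total <= limitMb;
}

// Resident set size of the current node process, in MB, for measuring a real app.
function currentRssMb() {
  return Math.round(process.memoryUsage().rss / (1024 * 1024));
}

console.log(fitsInRam([150, 20, 20], 600)); // 190 MB against 600 MB: prints true
console.log(currentRssMb() + ' MB used by this process');
```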

Free Now, Built To Scale

Google Cloud Services’ free tier actually gives you many other things for free, as well.

If I wanted to free up that f1-micro, I could turn my zipcoder project into a true microservice architecture. To do this for free, we’d use their Cloud Functions service (their equivalent of AWS Lambda) and their Datastore service (their free NoSQL database). You get 2 million invocations/1 million seconds per month with Functions, and with Datastore you get 1 GB of NoSQL storage and 50k/20k reads/writes per day.
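
A sketch of what one such function could look like. HTTP-triggered Cloud Functions on the Node.js runtime receive Express-style req and res objects; the zipLookup name, the zip query parameter, and the in-memory placeholder table (standing in for a Datastore query) are all assumptions for illustration:

```javascript
// Placeholder data; a real version would query Datastore instead.
var polygons = {
  '00000': { type: 'Polygon', coordinates: [] }
};

// HTTP function: GET ?zip=00000 responds with the matching GeoJSON geometry.
function zipLookup(req, res) {
  var zip = req.query && req.query.zip;
  if (!zip) {
    res.status(400).json({ error: 'missing zip parameter' });
    return;
  }
  var shape = polygons[zip];
  if (!shape) {
    res.status(404).json({ error: 'unknown zip' });
    return;
  }
  res.status(200).json(shape);
}

// Cloud Functions looks the handler up by its exported name.
exports.zipLookup = zipLookup;
```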

Conclusion

The tradeoffs here are speed & availability, simplicity, automation, and design complexity.

You: Want the easiest option (that’s also pretty fast and highly available)?

Answer: Make it as a pure front-end static site and host it on Surge.

You: But I need a server, a static site won’t cut it for me.

Answer: Heroku can do it all, but it’ll be slow for initial start up.

You: That slow initial load is a deal-breaker, but I love the easy deployment tools Heroku gives me.

Answer: Mix it up–handle CORS, host the front-end on Surge, only host the custom server stuff on Heroku.

You: Hmmm… I don’t like the idea of separate deploys like that, I’d prefer to keep it all together.

Answer: Google Cloud Services f1-micro is a straight-up free server, with constant availability.

You: But I can only have one f1-micro, and the RAM is pretty small, it’ll fall over under load, etc… I want more.

Answer: Break your project up properly. Use microservices with Google Cloud Services Functions and store stuff in Datastore. Free to start, and built to auto-scale from the ground up.

You: Microservices are a bit too aggressively small, I have some more serious crunching to do.

Answer: Google has an in-between service you might be interested in that blurs the lines. The f1-micro is part of Google Compute Engine, and between that and Cloud Functions sits Google App Engine. For storage, too, they have various options, with a substantial amount of free space right off the bat.

Whatever your needs, using these resources and strategies, you should be able to get any project off the ground.